UI / Driven by Gen AI CLIP / Gen AI LMM / 1.8 Trillion Cloud Parameters

[Input Prompt] → [Text Encoder (CLIP)] → [Latent Space] → [Diffusion Process] → [Image Decoder] → [Image Output]
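The following is a deliberately toy Python sketch of the same data flow, to make the stages concrete: prompt, then text embedding, then noisy latent, then iterative denoising, then decoded pixels. Every function here (`encode_text`, `diffuse`, `decode`, `text_to_image`) and the 8-value latent size are hypothetical stand-ins, and nothing is learned. In latent diffusion systems such as Stable Diffusion, these stages are a trained CLIP text encoder, a learned denoising network, and a VAE decoder.

```python
import hashlib
import random

LATENT_DIM = 8  # hypothetical latent size, chosen only for illustration


def encode_text(prompt):
    """Toy stand-in for a CLIP text encoder: hash the prompt into a fixed vector."""
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()
    return [b / 255.0 for b in digest[:LATENT_DIM]]


def diffuse(condition, steps=50, seed=0):
    """Toy stand-in for the denoising loop: start from Gaussian noise in latent
    space and move 10% of the way toward the conditioning vector at each step."""
    rng = random.Random(seed)
    latent = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
    for _ in range(steps):
        latent = [x + 0.1 * (c - x) for x, c in zip(latent, condition)]
    return latent


def decode(latent, width=4):
    """Toy stand-in for the image decoder: clamp latent values to 0-255 pixel rows."""
    pixels = [max(0, min(255, round(x * 255))) for x in latent]
    return [pixels[i:i + width] for i in range(0, len(pixels), width)]


def text_to_image(prompt):
    """Run the stages in the same order as the diagram above."""
    return decode(diffuse(encode_text(prompt)))


print(text_to_image("Sci-Fi Warrior"))  # a 2 x 4 grid of grayscale values
```

The pipeline is deterministic for a given prompt and seed. That only holds for this toy: real samplers draw fresh noise unless you fix the seed.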



Gen AI LMMs / 1.8 Trillion Cloud-Based Parameters

[Input Prompt Engineering] → [Text Encoder (CLIP)] → [Latent Space] (Transformers) → [Diffusion Process] → [Image Decoder] (Perceptrons) → [Image Output] (Warrior)


{ "Attention Is All You Need" }
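The paper named above introduced the Transformer and its core operation, scaled dot-product attention: Attention(Q, K, V) = softmax(QKᵀ / √d_k) V. The plain-Python sketch below implements that formula for a single head. It uses no masking and no learned projection matrices (a real Transformer adds both, plus multiple heads), and the function names are illustrative.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Single-head attention: each query row attends over all key/value rows.

    Q: list of query vectors, K: list of key vectors (length d_k each),
    V: list of value vectors, one per key.
    """
    d_k = len(K[0])
    output = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        output.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return output


out = scaled_dot_product_attention(Q=[[1.0, 0.0]], K=[[1.0, 0.0], [0.0, 1.0]], V=[[10.0, 0.0], [0.0, 10.0]])
print(out)  # the query matches the first key better, so the first value dominates
```

The √d_k scaling keeps dot products from growing with vector length, which would otherwise push the softmax toward one-hot weights.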


In the rapidly evolving field of artificial intelligence, mastering prompt design has become a critical skill to set your ideas free. Whether you’re a content creator, developer, business professional, or AI enthusiast, understanding how to craft the perfect prompt can dramatically improve your results when working with AI models.


Introducing the LMArena for the World's Most Powerful Gen AI LMM


In the land of limitless models, every launch is ‘revolutionary’ until next week’s ‘most powerful’ shows up with shinier slides and bigger multi-modal benchmark scores on Humanity's Last Exam [HLE] 👽, with its roughly 2,500 academic questions.

One LMM to rule them all? Apparently, every model got the memo. Turns out the only thing more inflated than their parameter counts is their self-esteem.

Generative AI LMMs

A subfield of artificial intelligence that uses generative models to produce text, images, videos, or other forms of data.


Generative AI LMMs have appeared in a wide variety of industries, radically changing how content is created, analyzed, and delivered.

In healthcare, generative AI is instrumental in accelerating drug discovery by creating molecular structures with target characteristics, and in generating radiology images for training diagnostic models. This extraordinary ability not only enables faster and cheaper development but also enhances medical decision-making.

In finance, generative AI produces synthetic financial data for training models, automates report generation with natural language summarization, and tailors customer communications. It also powers chatbots and virtual agents. Collectively, these technologies enhance efficiency, reduce operational costs, and support data-driven decision-making in financial institutions.

The media industry uses generative AI for numerous creative activities such as music composition, scriptwriting, video editing, and digital art. The educational sector is affected as well: the tools personalize learning by creating quizzes and study aids and by assisting with essay composition. Both teachers and learners benefit from AI-based platforms that adapt to different learning styles.

Large multi-modal models [LMMs]

The rise of these models can be attributed to several key factors. Firstly, the availability of massive amounts of multi-modal data, such as image-text pairs from the web, has enabled training on an unprecedented scale.

Secondly, the development of powerful deep learning architectures, such as Transformers, has allowed for the efficient processing and fusion of information from multiple modalities, which is what lets these models handle multi-dimensional media [basically, LLMs on steroids].


Computational resources: Training and running large multi-modal models require significant computational resources, making them expensive and less accessible for smaller organizations or independent researchers.

Interpretability and explainability: As with any complex AI model, understanding how these models make decisions can be difficult.
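The arithmetic below puts "significant computational resources" into numbers. It estimates how much memory the 1.7–1.8 trillion parameter figure quoted on this page implies just for storing model state. The bytes-per-parameter values are standard for each numeric format. The 80 GB accelerator size and the 16 bytes/parameter training rule of thumb (mixed-precision Adam) are assumptions. Activations, KV caches, and parallelism overheads are ignored, so treat every result as a lower bound.

```python
import math

# Upper end of the 1.7-1.8 trillion parameter figure quoted on this page.
PARAMS = 1_800_000_000_000


def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed to hold the given per-parameter state, in GB (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus(num_params, bytes_per_param, gpu_memory_gb=80):
    """Lower bound on accelerators needed to fit that state alone (assumed 80 GB each)."""
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / gpu_memory_gb)


# fp32 = 4 bytes, fp16/bf16 = 2, int8 = 1 per parameter. 16 bytes/param is a common
# rule of thumb for mixed-precision Adam training: fp16 weights + fp16 gradients
# + fp32 master weights + two fp32 optimizer moments.
for label, nbytes in [("fp32 inference", 4), ("fp16/bf16 inference", 2),
                      ("int8 inference", 1), ("mixed-precision Adam training", 16)]:
    print(f"{label}: {weight_memory_gb(PARAMS, nbytes):,.0f} GB, "
          f"at least {min_gpus(PARAMS, nbytes)} x 80 GB GPUs")
```

Even in half precision, the weights alone come to 3,600 GB, which spans dozens of accelerators before a single request is served. That is the practical reason such models run as cloud services.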


Finally, consistency with Apple’s Foundational Multi-Modal Model ensures that the user interface (app) feels familiar within the Apple TV ecosystem while maintaining its own brand identity.

Gen AI

Tokens {256,000}

Parameters {1.7 – 1.8 Trillion}

Language Corpus {70% Training Data + 30% Testing Data}
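The 70/30 corpus split listed above (70% training data, 30% testing data) is a standard hold-out split: the model learns from the larger share and is evaluated on text it never saw. Below is a minimal sketch. The `split_corpus` name, the list-of-strings corpus, and the fixed seed are illustrative assumptions.

```python
import random


def split_corpus(documents, train_fraction=0.7, seed=42):
    """Shuffle a copy of the corpus and split it into (train, test) partitions."""
    shuffled = list(documents)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


docs = [f"doc-{i}" for i in range(1000)]
train, test = split_corpus(docs)
print(len(train), len(test))  # 700 300
```

Shuffling before cutting matters. If the corpus is ordered by source or date, a plain slice would give the test set a different distribution from the training set.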


{LLM/LMM-based Navigation UI}


A large language model [LLM] is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. LLMs are now being combined into poly-structured Large Multi-modal Models [LMMs] with Contrastive Language-Image Pre-training [CLIP].
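Contrastive Language-Image Pre-training trains an image encoder and a text encoder together. Within each batch, matching image-text pairs must score higher than every mismatched pair, enforced by a symmetric cross-entropy over the batch's similarity matrix. The sketch below computes only that loss for embeddings you pass in. The encoders themselves are omitted, the hand-written 2-D vectors in the usage line are hypothetical, and `temperature=0.07` is an assumed fixed value (CLIP learns its temperature during training).

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits, target):
    """-log softmax(logits)[target], computed stably via the log-sum-exp trick."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum_exp - logits[target]


def clip_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss: row i of each list is a matching image-text pair."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = similarity of image i to text j, sharpened by the temperature.
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Image -> text: each image should pick out its own caption (the diagonal).
    loss_i2t = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text -> image: each caption should pick out its own image (column-wise).
    loss_t2i = sum(cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


aligned = [[1.0, 0.0], [0.0, 1.0]]
print(clip_loss(aligned, aligned), clip_loss(aligned, [[0.0, 1.0], [1.0, 0.0]]))
```

The loss is near zero when each image lines up with its own caption and large when the captions are swapped. Minimizing it pulls the two modalities into a shared embedding space.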


Tokenization

I trust tokenization to segment and encode data for precise language modeling and semantic understanding.
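Many LLM tokenizers segment text into subword units with byte-pair encoding (BPE). The method starts from single characters (or bytes) and repeatedly merges the most frequent adjacent pair. The minimal character-level sketch below learns merges from a tiny hypothetical corpus and replays them to tokenize a word. Production tokenizers operate on bytes, apply pre-tokenization and special tokens, and map each token to an integer ID, none of which is shown here. The function names are illustrative.

```python
from collections import Counter


def apply_merge(symbols, pair):
    """Replace every adjacent occurrence of `pair` in a symbol tuple with one merged symbol."""
    out = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a whitespace-separated corpus."""
    # Start with every word split into single characters, weighted by frequency.
    words = Counter(tuple(word) for word in corpus.split())
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in words.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        # Merge the most frequent adjacent pair (ties go to the pair seen first).
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        new_words = Counter()
        for symbols, freq in words.items():
            new_words[apply_merge(symbols, best)] += freq
        words = new_words
    return merges


def encode(word, merges):
    """Tokenize a word by replaying the learned merges in the order they were learned."""
    symbols = tuple(word)
    for pair in merges:
        symbols = apply_merge(symbols, pair)
    return list(symbols)


merges = learn_bpe("low low low lower lowest", num_merges=2)
print(merges)                    # [('l', 'o'), ('lo', 'w')]
print(encode("lowest", merges))  # ['low', 'e', 's', 't']
```

Frequent fragments such as "low" become single tokens, while rarer endings stay split into smaller pieces. This is how a fixed vocabulary can still cover words it never saw whole.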

Tokenization {01}

70/30 Corpus {02}

ND Vectors CB {03}

I/O RLHF/CLIP {04}

Neural-Net {05}

UX/UI Patterns {06}

UX/UI Patterns for Designing Gen AI Experiences with LARGE Multi-modal Models.


UI/Gen AI

2023

UI Patterns driven by Gen AI

Methods

Technology Guidelines for building user-centric Interfaces.

AI or not AI

<User-Centered Design Foundation/>®

UX Needs

</method to ask>

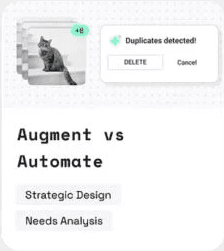

Ar vs Auto

</method to ask>

Levels

</method to ask>

Sources

</method to ask>

Adoption

</method to ask>


Interfaces for the Age of Intelligent Systems

As AI systems grow more dynamic and probabilistic, we’re shifting away from static interfaces toward adaptive, intelligent experiences. This evolution demands a new design language—one grounded in transparency, trust, and flexibility.


Gen AI/HCI

UX/UI Design & Development

2023

15+ next-gen UI patterns engineered to power human-centered design in Generative AI, LMMs, and LLMs—where interface meets intelligence.

AI or not AI
UX Needs
Ar vs Auto
Levels
Sources
Adoption
Models
Trust
Chains
Multi I/O
Recall
Privacy
Parameters
Co-Editing
Automation
I/O Errors
Feedback
Evaluation

<User-Centered Design Foundation/>®

For designers building AI-first products, it’s crucial to consider the interaction contract between humans and machines. Whether you’re working with chatbots, generative tools, or autonomous agents, thoughtful interface design can transform AI from a black box into a truly empowering experience.



Helping brands stand out for over two decades.

Ready to speak UX/UI design & development?

Let's talk

UX/UI Design & Development Studio

(Based in Indianapolis, IN)


39°52'14.2"N 86°08'45.1"W

© The Apple logo & iOS devices rendered here are trademarks of Apple Inc., registered in the U.S. and other countries.
